Encrypted-messaging app Telegram suffered a massive data leak that exposed the personal data of millions of users. A rival to

Telegram Data Leak Exposes Millions of Records on Darknet on Latest Hacking News.

Canada's government services hit by cyberattacks – Vishing attacks surge amid COVID-19 pandemic – DDoS extortionists strike again

The post Week in security with Tony Anscombe appeared first on WeLiveSecurity

Human beings have always loved traveling and exploring new places. What started off as a nomadic lifetime has over generations

A Quick Guide to Digital Tourism and Destination Marketing on Latest Hacking News.

In this article, I’ll introduce Mastodon, a social media platform founded in the spirit of a decentralized Internet. A decentralized web has plenty of challenges and is not necessarily for the faint-hearted, but there are good reasons to persevere.

On July 15, 2020, one of the biggest scams in Twitter’s history happened. Through intelligent social engineering, a group of people managed to gain access to Twitter’s administrative tools, allowing them to post tweets directly from several high-profile accounts.

More than 130 influential Twitter accounts were hacked. In a matter of minutes, the profiles of Apple, Bill Gates, Elon Musk, and others asked individuals to send Bitcoin to a cryptocurrency wallet, with the promise that the money sent would be doubled and returned as a charitable gesture.

Within minutes of the initial tweets, more than 300 transactions had already taken place — to a value of over US$180,000 — before Twitter took the scam messages down.

With a centralized structure in place, only a single person with administrative rights needed to be tricked into giving out access to these high-profile accounts. In similar circumstances, someone with a more sinister motive might aim at a stock market crash, fabricated political tension, or even global unrest.

Centralization is a double-edged sword. Its core idea is that a social media platform stores, owns, and protects your data. While this may sound worrying, there are benefits. The first and strongest advantage of centralized platforms is their ease of use. For example, when you forget your password or your account gets hacked, a centralized platform can easily restore your access, since it stores all of your data on its servers.

But this sense of protection comes at a price. Your data, such as tweets, retweets, likes, and shares, is stored and owned by a corporation. These companies have yearly financial KPIs, and your data is a great way for them to generate money, particularly when it is used for targeted advertising. Another downside is that such platforms are not open source, so there is no transparency between users and the platform they're on. As a user, you have no idea what's happening under the hood or how your data is being handled.

So what if there was a platform with a decentralized structure, where you owned what you posted and you could see what your data was being used for? For a long time, people have tried to create such platforms — App.net, Peach, Diaspora and Ello are a few of the better-known examples.

But the latest pioneer in decentralized social media comes with all of the benefits listed above.

Mastodon was released in 2016. In the eyes of many, this network is the first step in social media decentralization. While similar to Twitter in both appearance and features, Mastodon focuses on the safety and privacy of its user base by being decentralized and federated. All of its data are distributed across a vast number of independent servers, known as “instances”. Each instance has its own terms of service, code of conduct, and moderation policies while working seamlessly together with other servers as a federating network.

The founder of Mastodon, Eugen Rochko, explains that the platform works very much like email. Email users can easily connect, even if one person uses Gmail and the other uses Outlook. The same applies to Mastodon and its instances. Users are free to interact with a vast number of instances, and each instance can also block other servers whose policies or content it objects to, without losing access to the rest of the Mastodon network.
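The email analogy also applies to addresses: a Mastodon account is written as @user@instance, much like user@domain for email. Below is a minimal sketch that splits a handle into its user and instance parts and builds the WebFinger lookup URL. WebFinger (RFC 7033) is the discovery mechanism Mastodon servers use to find accounts hosted on other instances. The function names and the example.social handle are hypothetical. The sketch leaves out the HTTP request itself and the stricter validation a real client would need.

```javascript
// Split a Mastodon-style handle ("@user@instance" or "user@instance")
// into its user and instance (domain) parts, much like an email address.
function parseHandle(handle) {
  const trimmed = handle.startsWith('@') ? handle.slice(1) : handle;
  const parts = trimmed.split('@');
  if (parts.length !== 2 || !parts[0] || !parts[1]) {
    throw new Error(`Not a user@instance handle: ${handle}`);
  }
  // Domain names are case-insensitive, so normalize to lowercase.
  return { user: parts[0], domain: parts[1].toLowerCase() };
}

// Build the WebFinger URL that one server would query to discover
// an account hosted on another instance.
function webfingerUrl(handle) {
  const { user, domain } = parseHandle(handle);
  const resource = encodeURIComponent(`acct:${user}@${domain}`);
  return `https://${domain}/.well-known/webfinger?resource=${resource}`;
}

console.log(parseHandle('@alice@example.social'));
console.log(webfingerUrl('@alice@example.social'));
```

The instance part of the handle tells any server where the account lives, just as the part after the @ in an email address tells a mail server where to deliver.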

Getting started with Mastodon is not as easy as joining Facebook or Twitter. Because the platform is federated, much like email, you first choose one of the many sites (instances) running the Mastodon software and sign up there, just as you would create an email account with a provider like Gmail, Hotmail or Protonmail.

Continue reading Mastodon: A Federated Answer to Social Media Centralization on SitePoint.

Website speed refers to how fast a site responds to requests from the Web. As I will highlight in this article, the speed at which a website loads is critical to both its users and its owners.

Users get frustrated whenever they are unable to open a web page quickly. Sites that take longer to load put users off and get little viewership. Studies show that 40% of Internet users will not wait more than three seconds for a site to load.

In such cases, the site loses traffic to competitors. Slow pages also harm a brand's image, as users associate a site's speed with the quality of its products and services.

Since 2010, Google has used site speed as a factor in its algorithm for ranking web pages. Website speed therefore remains an important consideration in web design whenever we work on search engine optimization (SEO).

Mobile-friendly pages are essential, as more than half of Internet users browse on mobile devices. When working on a website, think mobile first rather than desktop first. The good news is that most optimization practices apply equally to mobile and desktop.

Here are a few simple tips to help make your mobile site fast.

Browsing the web involves requesting pages stored on remote servers. The speed of the remote server largely determines how quickly a requested page is delivered.

For this reason, the choice of hosting service provider is significant. There are plenty of great choices when it comes to high-speed, secure and affordable web hosting.


To serve a single page, a server may execute thousands of lines of interrelated code, and this takes time. Sites on slow hosting servers are at a disadvantage, as search engines give priority to the fastest pages.

In 2015, Google began boosting mobile-friendly sites in its search results with an algorithm update nicknamed “Mobilegeddon”.

A responsive design changes dynamically depending on how the site is accessed (through a desktop or a mobile device), so users see a layout suited to each device.

Google recommends responsive designs over separate mobile-only sites, as they load faster. Responsive designs also offer a big SEO advantage: because each page has a single version, we are more likely to gain traffic from social sharing.

To achieve this, consider performance, design, and content together to ensure good usability. Responsive sites adapt their layout using CSS3 media queries, flexible images, and fluid, proportion-based grids.

The fluid grid concept calls for sizing page elements in relative units, such as percentages, rather than in absolute units such as pixels.

Flexible images are also sized in relative units, so they do not spill outside their containing element.

Media queries let you apply different CSS style rules depending on the characteristics of the device used to access the site (for instance, the width of its display).

The responsive design aims at automated adjustment on different screen sizes, be it a mobile phone, tablet, laptop, or desktop.
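A common rule of thumb makes the fluid grid idea concrete. To convert a fixed-width mockup into relative units, divide an element's target width by the width of its container (target ÷ context = result) and express the result as a percentage. This is a minimal sketch: toFluidPercent and the 960px layout are illustrative assumptions, not part of any framework.

```javascript
// Convert a fixed pixel width from a design mockup into a percentage
// of its containing element: target / context * 100.
function toFluidPercent(targetPx, contextPx) {
  if (contextPx <= 0) throw new RangeError('Container width must be positive');
  const percent = (targetPx / contextPx) * 100;
  // Round to four decimals; browsers accept fractional percentages.
  return `${Number(percent.toFixed(4))}%`;
}

// A 300px sidebar and a 640px main column inside a 960px container.
console.log(toFluidPercent(300, 960)); // 31.25%
console.log(toFluidPercent(640, 960)); // 66.6667%
```

In the stylesheet, the sidebar would then get a width of 31.25% instead of 300px, so it shrinks and grows with the screen.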

As you strive to make your site captivating to the eye, make sure it doesn't become so heavy that it loads slowly. The more we add to a site, the more code is involved, and the professional term for this is code bloat. Its most likely cause is a designer being overly focused on the site's appearance.

To reduce the weight of a site, we apply the following strategies.

Always keep the site simple to reduce the amount of code. As we saw earlier, the code on a site affects its loading speed, and a mobile screen can easily get overcrowded.

To achieve a simpler design, I recommend using a single call to action per page and simple, eye-catching designs. Keeping your site simple also means offering users fewer, shorter steps. This not only makes the site lighter but also offers a friendlier user experience.

I advise using fewer photos and images, and less color. Good-quality images are still a plus on any site.

However, the more images you include, the heavier the site becomes: typically, images make up about 63% of a page's weight. As well as using fewer images, we can reduce file sizes by compressing them. Compression makes an image smaller with little or no loss of quality.

To reduce the weight of a site, avoid custom fonts, which add extra files and code for the browser to download. Custom code in general brings lots of CSS and JavaScript, so I advise keeping it to the absolute minimum necessary.

We minify code to improve the loading speed of a web page. The process streamlines code by removing characters that are not needed for it to execute. In code minification, we get rid of characters such as whitespace, line breaks, and comments.
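Here is a deliberately naive CSS minifier that illustrates the idea. It is a sketch for illustration only. It assumes the stylesheet contains no strings or url() values with comment markers or meaningful spaces. It can also break selectors such as `a :hover`, which depend on a space before a colon. Real projects use dedicated tools such as cssnano for CSS or Terser for JavaScript.

```javascript
// A naive CSS minifier: strips comments and whitespace that the browser
// does not need. Assumes no strings or url() values contain these patterns.
function minifyCss(css) {
  return css
    .replace(/\/\*[\s\S]*?\*\//g, '')   // remove /* ... */ comments
    .replace(/\s+/g, ' ')               // collapse runs of whitespace and newlines
    .replace(/\s*([{}:;,>])\s*/g, '$1') // drop spaces around punctuation
    .replace(/;}/g, '}')                // the last semicolon in a block is optional
    .trim();
}

const source = `
/* Main heading */
h1 {
  color: #333;
  margin: 0 auto;
}
`;

console.log(minifyCss(source)); // h1{color:#333;margin:0 auto}
```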

When visitors arrive at a site, they see the above-the-fold content first. To speed up a mobile website, we can avoid loading the whole page at once by using lazy loading. This technique loads content only as it is needed, so the first screen appears as quickly as possible.
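In a browser, the check is usually delegated to the IntersectionObserver API or to the native `loading="lazy"` attribute on images. This sketch models only the underlying decision in plain JavaScript, so it can run anywhere: which images are close enough to the visible area to request now. The image list, its fields, and the 200px margin are hypothetical.

```javascript
// Decide which images are near enough to the viewport to load now.
// Positions are in CSS pixels from the top of the page; `margin` starts
// loading slightly before an image scrolls into view.
function imagesToLoad(images, scrollTop, viewportHeight, margin = 200) {
  const top = scrollTop - margin;
  const bottom = scrollTop + viewportHeight + margin;
  return images
    .filter((img) => !img.loaded)
    .filter((img) => img.y + img.height >= top && img.y <= bottom)
    .map((img) => img.src);
}

const page = [
  { src: 'hero.jpg', y: 0, height: 400, loaded: false },
  { src: 'gallery-1.jpg', y: 900, height: 300, loaded: false },
  { src: 'footer.jpg', y: 3000, height: 200, loaded: false },
];

// First paint on a 700px-tall screen: only the hero image and the one
// just below the fold are requested; the footer image waits.
console.log(imagesToLoad(page, 0, 700));
```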

Too many redirects weigh heavily on a site. Redirects can be a useful SEO tool, but I recommend limiting the number used on a single site.

When users click a link, the browser ideally fetches that page directly from the server. If redirects are involved, however, the browser has to request one or more intermediate URLs before it reaches the final page, and each one adds a round trip.
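The cost adds up quickly. This sketch uses a hypothetical lookup table in place of real HTTP responses. It follows a chain of the kind a site accumulates when an http-to-https redirect, a moved page, and a separate mobile domain are layered on top of each other, and it counts the extra hops.

```javascript
// Follow a chain of redirects through a lookup table and count the hops.
// Each hop stands for one extra request/response round trip.
function resolveRedirects(redirects, url, maxHops = 10) {
  const seen = new Set([url]);
  let current = url;
  let hops = 0;
  while (Object.prototype.hasOwnProperty.call(redirects, current)) {
    current = redirects[current];
    hops += 1;
    if (seen.has(current)) throw new Error(`Redirect loop at ${current}`);
    if (hops > maxHops) throw new Error('Too many redirects');
    seen.add(current);
  }
  return { finalUrl: current, hops };
}

const redirects = {
  'http://example.com/shoes': 'https://example.com/shoes',
  'https://example.com/shoes': 'https://example.com/products/shoes',
  'https://example.com/products/shoes': 'https://m.example.com/products/shoes',
};

console.log(resolveRedirects(redirects, 'http://example.com/shoes'));
```

Here the link costs three extra round trips. Linking straight to the final URL would remove all of them.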

The internet is more competitive than the real world, and mobile internet use is on the rise, so the speed of mobile sites is critical to search engine optimization. As we saw above, several factors can limit the speed of a mobile site and cost it the traffic we want.

The post 9 Ways to Speed Up Mobile Website appeared first on The Crazy Programmer.

The extortionists attempt to scare the targets into paying by claiming to represent some of the world’s most notorious APT groups

The post DDoS extortion campaign targets financial firms, retailers appeared first on WeLiveSecurity

“Interoperability” is the act of making a new product or service work with an existing product or service: modern civilization depends on the standards and practices that allow you to put any dish into a dishwasher or any USB charger into any car’s cigarette lighter.

But interoperability is just the ante. For a really competitive, innovative, dynamic marketplace, you need adversarial interoperability: that’s when you create a new product or service that plugs into the existing ones without the permission of the companies that make them. Think of third-party printer ink, alternative app stores, or independent repair shops that use compatible parts from rival manufacturers to fix your car or your phone or your tractor.

Adversarial interoperability was once the driver of tech’s dynamic marketplace, where the biggest firms could go from top of the heap to scrap metal in an eyeblink, where tiny startups could topple dominant companies before they even knew what hit them.

But the current crop of Big Tech companies has secured laws, regulations, and court decisions that have dramatically restricted adversarial interoperability. From the flurry of absurd software patents that the US Patent and Trademark Office granted in the dark years between the first software patents and the Alice decision to the growing use of “digital rights management” to create legal obligations to use the products you purchase in ways that benefit shareholders at your expense, Big Tech climbed the adversarial ladder and then pulled it up behind them.

That can and should change. As Big Tech grows ever more concentrated, restoring adversarial interoperability must be a piece of the solution to that concentration: making big companies smaller makes their mistakes less consequential, and it deprives them of the monopoly profits they rely on to lobby for rules that make competing with them even harder.

For months, we have written about the history, theory, and practice of adversarial interoperability. This page rounds up our writing on the subject in one convenient resource that you can send your friends, Members of Congress, teachers, investors, and bosses as we all struggle to figure out how to re-decentralize the Internet and spread decision-making power around to millions of individuals and firms, rather than the executives of a handful of tech giants.

(Republished from EFF Deeplinks under a Creative Commons Attribution 4.0 license)

Continue reading Adversarial Interoperability on SitePoint.

In this article, we’ll explore Windows Terminal, the ideal accompaniment to WSL2. It’s fast, configurable, looks great, and offers all the benefits of both Windows and Linux development.

Windows has fully embraced Linux, and WSL2 makes it a seamless pleasure.

Your distro’s terminal can be accessed by:

- entering wsl or bash at a Powershell or command prompt
- running %windir%\system32\bash.exe ~

Windows Terminal offers a further choice, but you won’t regret installing it. The new application features:

The easiest way to install Windows Terminal is via the Microsoft Store:

ms-windows-store://pdp/?ProductId=9n0dx20hk701

If this link fails, try opening the Microsoft Store in your browser or launching the Microsoft Store app from the Windows Start menu and searching for “Terminal”.

Note: be careful not to select the earlier “Windows Terminal Preview” application.

Hit Get and wait a few seconds for installation to complete.

If you don’t have access to the Store, you can download the latest release from GitHub.

The Windows Terminal app icon is now available in the Windows Start menu. For easier access, right-click the icon and choose Pin to Start or More, followed by Pin to taskbar.

When it’s first run, Windows Terminal starts with Powershell as the default profile. A drop-down menu is available to launch other tabs and access the settings:

Terminal automatically generates profiles for all WSL distros and Windows shells you have installed, although it’s possible to disable generation in the global settings.

Open a new tab for the default profile by clicking the + icon or Ctrl + Shift + T. To open a tab for a different profile, choose it from the drop-down menu or press Ctrl + Shift + N, where N is the profile’s number.

Press Alt + Shift + D to duplicate and split the pane. The active pane is split in two along the longest axis each time it is used:

To force creation of a:

- vertical pane, press Alt + Shift + +
- horizontal pane, press Alt + Shift + -

To open another profile in a new pane, hold down the Alt key when choosing it from the drop-down menu.

Hold down Alt and use the cursor keys to switch between active panes from the keyboard. The size of a pane can be adjusted by holding Alt + Shift and using the cursor keys to resize accordingly.

Tabs can be renamed by double-clicking the text. You can also change the name or color by right-clicking the tab and choosing a menu option:

This only affects the current tab; it doesn’t permanently change the profile.

To close the active pane or tab, press Ctrl + Shift + W or enter the terminal’s standard exit command (usually exit).

The text size of the active terminal can be resized with Ctrl + + and Ctrl + -. Alternatively, hold down Ctrl and scroll the mouse wheel.

Use the scroll bar to navigate through the terminal output. Alternatively, hold down Ctrl + Shift and press cursor up, cursor down, Page Up or Page Down to navigate using the keyboard.

Press Ctrl + Shift + F to open the search box:

Enter any term then use the up and down icons to search the terminal output. Click the Aa icon to activate and deactivate exact-case matching.

By default, copy and paste are bound to Ctrl + Shift + C and Ctrl + Shift + V respectively, although Ctrl + C and Ctrl + V will also work.

Note: be wary that Ctrl + C can terminate a Linux application, so using Shift is advisable.

An automatic copy-on-select option is available in the global settings, and you can also paste the current clipboard item by right-clicking the mouse.

Settings are accessed from the drop-down menu or Ctrl + , (comma). The configuration is defined in a single settings.json file, so you may be prompted to choose a text editor. VS Code is a great choice, although Notepad is fine if you’re happy to edit without color-coding and syntax checking.

settings.json controls:

The file uses the following format:

// This file was initially generated by Windows Terminal
{
    // general settings, e.g.
    "initialRows": 40,

    // profile settings
    "profiles":
    {
        "defaults":
        {
            // settings that apply to all profiles
        },
        "list":
        [
            // list of individual profiles, e.g.
            {
                "guid": "{81d1dceb-c123-5678-90a1-123abc456def}",
                "name": "Windows PowerShell",
                "commandline": "powershell.exe"
            },
            {
                "guid": "{91d1dceb-c123-5678-90a1-123abc456def}",
                "name": "Ubuntu",
                "source": "Windows.Terminal.Wsl"
            }
        ]
    },

    // custom color schemes, e.g.
    "schemes": [
        {
            "name": "My new theme",
            "cursorColor": "#FFFFFF",
            "selectionBackground": "#FFFFFF",
            "background" : "#0C0C0C",
            "foreground" : "#CCCCCC"
        }
    ],

    // custom key bindings, e.g.
    "keybindings":
    [
        { "command": "find", "keys": "ctrl+shift+f" }
    ]
}
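settings.json is not strict JSON: it contains // comments, so a standard JSON parser rejects it. If you want to read the file from a script, one option is to strip comments that sit outside string literals before calling JSON.parse. That approach is sketched here as an assumption, not as anything Windows Terminal itself ships. The sketch does not handle trailing commas, and a maintained JSONC parser such as Microsoft's jsonc-parser package is the safer choice for real use. The "note" key exists only to show that // inside a string survives.

```javascript
// Strip // line comments and /* */ block comments that sit outside string
// literals, then hand the result to the standard JSON parser.
function parseJsonWithComments(text) {
  let out = '';
  let inString = false;
  for (let i = 0; i < text.length; i++) {
    const ch = text[i];
    const next = text[i + 1];
    if (inString) {
      out += ch;
      if (ch === '\\') {
        out += next ?? ''; // copy the escaped character (e.g. \" or \\) as-is
        i++;
      } else if (ch === '"') {
        inString = false;  // closing quote
      }
    } else if (ch === '"') {
      inString = true;
      out += ch;
    } else if (ch === '/' && next === '/') {
      while (i < text.length && text[i] !== '\n') i++; // skip to end of line
      out += '\n';
    } else if (ch === '/' && next === '*') {
      const end = text.indexOf('*/', i + 2);
      i = end === -1 ? text.length : end + 1; // continue after the closing */
    } else {
      out += ch;
    }
  }
  return JSON.parse(out);
}

const sample = `// This file was initially generated by Windows Terminal
{
    // general settings, e.g.
    "initialRows": 40,
    /* a block comment */
    "profiles": { "list": [ { "name": "Ubuntu", "source": "Windows.Terminal.Wsl" } ] },
    "note": "a // inside a string is kept"
}`;

const settings = parseJsonWithComments(sample);
console.log(settings.initialRows);           // 40
console.log(settings.profiles.list[0].name); // Ubuntu
```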

Defaults are defined in defaults.json. Open it by holding down Alt when clicking Settings in the drop-down menu.

Warning: do not change the defaults file! Use it to view default settings and, where necessary, add or change a setting in settings.json.

The following global settings are the most useful, although more are documented in the Windows Terminal documentation.

"defaultProfile" defines the GUID of the profile used as the default when Windows Terminal is launched.

Set "copyOnSelect" to true to automatically copy selected text to your clipboard without having to press Ctrl + Shift + C.

Set "copyFormatting" to false to just copy plain text without any styling. (I wish this were the default for every application everywhere!)

Set "initialColumns" and "initialRows" to the number of characters for the horizontal and vertical dimensions.

"tabWidthMode" can be set to:

- "equal": each tab is the same width (the default)
- "titleLength": each tab is set to the width of its title, or
- "compact": inactive tabs shrink to the width of their icon.

"disabledProfileSources" sets an array which prevents profiles being automatically generated. For example:

"disabledProfileSources": [
    "Windows.Terminal.Wsl",
    "Windows.Terminal.Azure",
    "Windows.Terminal.PowershellCore"
],

This would disable all generated profiles; remove whichever ones you want to retain.
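"defaultProfile" holds a GUID rather than a name, so it must match the "guid" of an entry in profiles.list. Below is a sketch of that lookup as it might appear in a script that inspects your settings. findDefaultProfile is a hypothetical helper, not part of Windows Terminal. It assumes the file has already been parsed into an object (settings.json may contain comments), and comparing GUIDs case-insensitively is an assumption of this sketch.

```javascript
// Find the profile whose "guid" matches "defaultProfile".
// GUIDs are compared case-insensitively here (an assumption of this sketch).
function findDefaultProfile(settings) {
  const wanted = String(settings.defaultProfile ?? '').toLowerCase();
  const list = (settings.profiles && settings.profiles.list) || [];
  const profile = list.find((p) => String(p.guid).toLowerCase() === wanted);
  if (!profile) {
    throw new Error(`No profile in profiles.list has GUID ${settings.defaultProfile}`);
  }
  return profile;
}

// Parsed settings, shaped like the settings.json example above.
const settings = {
  defaultProfile: '{91d1dceb-c123-5678-90a1-123abc456def}',
  profiles: {
    list: [
      { guid: '{81d1dceb-c123-5678-90a1-123abc456def}', name: 'Windows PowerShell', commandline: 'powershell.exe' },
      { guid: '{91d1dceb-c123-5678-90a1-123abc456def}', name: 'Ubuntu', source: 'Windows.Terminal.Wsl' },
    ],
  },
};

console.log(findDefaultProfile(settings).name); // Ubuntu
```

With "defaultProfile" set to the Ubuntu GUID, as in this object, Windows Terminal would open an Ubuntu tab at launch instead of Powershell.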

Continue reading The Complete Guide to Windows Terminal on SitePoint.

The issue of VPS security is a topic of concern, even for established website owners. As a novice, remember that

How to Keep Your VPS Based Project Secured on Latest Hacking News.

Google has recently released the stable version of Chrome 85 with numerous updates and a serious bug fix. Exploiting this

Google Patched Serious Code Execution Bug With Chrome 85 on Latest Hacking News.

Chrome gets a new way of managing tabs while Firefox now features a new add-ons blocklist

The post New Chrome, Firefox versions fix security bugs, bring productivity features appeared first on WeLiveSecurity

Introduction to E-commerce fraud prevention tools

Today the e-commerce industry is on the rise. With the unfortunate situation with the

10 Most Effective E-Commerce Fraud Detection Tools in 2020 on Latest Hacking News.

Introduction

With Microsoft eyeing the purchase of firms specializing in Robotic Process Automation (RPA), the industry’s promise as well as more mainstream

The Ins and Outs of RPA Technology on Latest Hacking News.

Remote work has become increasingly common in the wake of COVID-19. The need for employees to stay home from the

Managing the Insider Threat of Remote Workers on Latest Hacking News.

Bitcoin

Bitcoin is a cryptocurrency, a form of digital currency. It is not an official currency issued by any government or bank.

Best Bitcoin Practice for New Users on Latest Hacking News.

Bitcoin is a digital currency, also known as a cryptocurrency, which was created to facilitate and speed up transactions across borders. This

Bitcoin vs. Other Cryptocurrencies on Latest Hacking News.

A researcher has publicly disclosed a bug affecting the Safari browser after Apple attempted to delay the fix.

Safari Bug That Allows Stealing Data Disclosed After Apple Delays A Patch on Latest Hacking News.

The world’s largest cruise operator Carnival Corporation has reported a security incident. As revealed, Carnival suffered a ransomware attack that

Carnival Corporation Cruise Operator Suffered Ransomware Attack on Latest Hacking News.

Cybercriminals increasingly take aim at teleworkers, setting up malicious duplicates of companies' internal VPN login pages

The post FBI, CISA warn of spike in vishing attacks appeared first on WeLiveSecurity

Exchanging information between people in different locations has always been central to supporting relationships in social and business environments. The exchange of information is a business in its own right, and the faster information can be relayed, the more efficient the communication method. Methods of communication have grown rapidly, supported by the extensive development of technology and the spread of the internet.

The most significant feature of chat applications is their ability to relay information as soon as it is sent. The data transmitted can be as complex as a video or an image or as simple as a two-letter word. Modern chat applications support voice and video calls, text messages, and emoticons, among other types of messages.

This article briefly explains how a real-time chat API works to relay information between users.

We begin by understanding the major components that support the operations of a chat application.

Main Components That Support the Functioning of a Chat Application

There are three main components required for a chat application to function as intended: the messaging application, the server, and the means of connectivity.

The messaging application is the part of the chat system that the user accesses from a mobile phone or a personal computer. It is the starting point of the user interface and is usually designed with features that let the user type, edit, and send a message.

To support interaction, the messaging application should offer a text box for the user to compose a text message. The text box typically has a “Send” or “Enter” button to send the written message. The user should also be able to compose other types of messages, using emoticons and stickers, within the messaging application.

The messaging application must connect to a server to function as intended. A server may be a physical or virtual machine programmed to receive and share messages between the users connected to it. Users connect to the server through a computer or mobile application, such as one built on a real-time chat API.

Servers vary in type, size, and efficiency, but all of them receive instant messages sent from many computers or mobile applications in diverse places. The server can also keep messages from different users separate, so that messages are never mixed up and no user receives the wrong message.

A server can also identify a user through predetermined credentials, which are required to access the information stored on the server for that user. Usually, these credentials are a username and a password, and the user must first create an account before accessing any stored information. This is an important security feature to safeguard data privacy.
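The article does not say how servers check credentials. A common approach, shown here as an assumption using Node's built-in crypto module, is to store a salted scrypt hash for each account and compare hashes in constant time at sign-in, so that the server never keeps the raw password. The in-memory Map and the signUp/signIn helpers are hypothetical stand-ins for a real user database.

```javascript
const crypto = require('crypto');

// Derive a salted hash so the server never stores the raw password.
function hashPassword(password) {
  const salt = crypto.randomBytes(16);
  const hash = crypto.scryptSync(password, salt, 64);
  return { salt: salt.toString('hex'), hash: hash.toString('hex') };
}

// Re-derive the hash with the stored salt and compare in constant time.
function verifyPassword(password, stored) {
  const expected = Buffer.from(stored.hash, 'hex');
  const salt = Buffer.from(stored.salt, 'hex');
  const actual = crypto.scryptSync(password, salt, expected.length);
  return crypto.timingSafeEqual(actual, expected);
}

// Hypothetical in-memory account store: username -> { salt, hash }.
const accounts = new Map();

function signUp(username, password) {
  if (accounts.has(username)) throw new Error(`Username taken: ${username}`);
  accounts.set(username, hashPassword(password));
}

function signIn(username, password) {
  const stored = accounts.get(username);
  return stored !== undefined && verifyPassword(password, stored);
}

signUp('alice', 'correct horse battery staple');
console.log(signIn('alice', 'correct horse battery staple')); // true
console.log(signIn('alice', 'wrong guess'));                  // false
console.log(signIn('mallory', 'anything'));                   // false
```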

The means of connectivity is what links the server to the chat application on the mobile phone or computer. One might already have a message composed in the chat application and a dedicated application server, but without a connection the message cannot travel between them. The internet is the connectivity component required to transmit information between the application and the application server.

In this next part, we discuss how information is relayed between the chat application and the application server once a steady internet connection has been established.

The following is the communication process of a chat application:

When the chat application first connects to the application server, the server registers the connection as a new user. The user's identity remains anonymous until the access credentials entered in the chat application are submitted to the server for authentication and storage.

This level of user identification is sufficient for communication among many users whose identities need not be revealed to other users or recipients. However, proper identification is required when a message is sent directly from one user to another, because otherwise the server could not determine the message's recipient.

In this stage, users identify themselves to the server by entering their usernames and passwords. These are created during sign-up, after the chat application has been installed on the phone or personal computer.

Once a user is correctly identified, the application server assigns them a unique tracking/mapping code. Each user is tracked individually, so that as more users register and access the server, messages are not mixed up and no user receives information meant for another.

This is the main objective of all other processes. The process of exchanging information begins when the sender types and sends the message from the message application endpoint. The server receives the message. The received message and the sender of the message are also distinctly identified.

The server delivers the received message to the recipient specified by the sender. The recipient's connection is identified and labeled separately from the sender's, so the message does not end up at the wrong destination.
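The identification and routing steps above can be modelled in a few lines. This is a minimal sketch, not how any particular chat product works. ChatRelay and its methods are hypothetical, in-memory Maps replace the server's storage and live connections, and a random token plays the role of the unique tracking/mapping code. It assumes credentials have already been verified before connect() is called.

```javascript
const crypto = require('crypto');

// A toy, in-memory relay. A real server would keep a live network connection
// per user and persist messages in a database.
class ChatRelay {
  constructor() {
    this.sessions = new Map(); // tracking code -> username
    this.inboxes = new Map();  // username -> messages delivered to that user
  }

  // Call only after the user's credentials have been verified.
  connect(username) {
    const code = crypto.randomBytes(16).toString('hex'); // unique tracking code
    this.sessions.set(code, username);
    if (!this.inboxes.has(username)) this.inboxes.set(username, []);
    return code;
  }

  // The sender is identified by its tracking code, never by a name it claims.
  send(code, recipient, text) {
    const sender = this.sessions.get(code);
    if (!sender) throw new Error('Unknown session');
    const inbox = this.inboxes.get(recipient);
    if (!inbox) throw new Error(`Unknown recipient: ${recipient}`);
    inbox.push({ from: sender, text });
  }

  // Each user can only read the inbox mapped to their own tracking code.
  receive(code) {
    const user = this.sessions.get(code);
    if (!user) throw new Error('Unknown session');
    return this.inboxes.get(user);
  }
}

const relay = new ChatRelay();
const aliceCode = relay.connect('alice');
const bobCode = relay.connect('bob');

relay.send(aliceCode, 'bob', 'Hi Bob!');
console.log(relay.receive(bobCode).map((m) => `${m.from}: ${m.text}`).join('\n')); // alice: Hi Bob!
console.log(relay.receive(aliceCode).length); // 0
```

Keying sessions by a server-issued code, rather than trusting a sender name supplied by the client, is what keeps one user's messages from being attributed to, or delivered to, someone else.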

The main limitation of real-time chat applications is the limited interoperability between servers belonging to different chat applications. For instance, you cannot send a message from WhatsApp Messenger directly to Facebook Messenger in real time, because the two apps run on different servers that do not exchange messages with each other.

Real-time exchange of information on chat applications is critical for social and business networking. Messages are received instantaneously, provided there is a stable internet connection between the users and the servers. This simple exchange of data is made possible by a complex network of programs and processes between messaging applications and application servers.

The post How a Real-Time Chat Application Works appeared first on The Crazy Programmer.

Recently, the Discount Rules for WooCommerce Plugin has made it to the news owing to multiple vulnerabilities. Exploiting these flaws

Numerous Vulnerabilities Found In Discount Rules for WooCommerce Plugin on Latest Hacking News.

As ransomware attacks continue to target the educational sector, the University of Utah has emerged as the recent victim to

University Of Utah Suffered Ransomware Attack – Paid Ransom To Recover on Latest Hacking News.

Asynchrony in any programming language is hard. Concepts like concurrency, parallelism, and deadlocks make even the most seasoned engineers shiver. Code that executes asynchronously is unpredictable and difficult to trace when there are bugs. The problem is inescapable because modern computers have multiple cores: each core has a thermal limit, so individual cores are no longer getting much faster. This puts pressure on the developer to write efficient code that takes advantage of the hardware.

JavaScript is single-threaded, but does this limit Node from utilizing modern architecture? One of the biggest challenges is dealing with multiple threads because of their inherent complexity. Spinning up new threads and managing context switches between them is expensive. Both the operating system and the programmer must do a lot of work to deliver a solution that has many edge cases. In this take, I’ll show you how Node deals with this quagmire via the event loop. I’ll explore every part of the Node.js event loop and demonstrate how it works. This loop is one of the “killer app” features in Node, because it solved a hard problem in a radical new way.

The event loop is a single-threaded, non-blocking, and asynchronously concurrent loop. For those without a computer science degree, imagine a web request that does a database lookup. A single thread can only do one thing at a time. Instead of waiting on the database to respond, it continues to pick up other tasks in the queue. In the event loop, the main loop unwinds the call stack and doesn’t wait on callbacks. Because the loop doesn’t block, it’s free to work on more than one web request at a time. Multiple requests can get queued at the same time, which makes it concurrent. The loop doesn’t wait for everything from one request to complete, but picks up callbacks as they come without blocking.
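You can simulate the database-lookup scenario with timers. This sketch assumes a hypothetical fakeDbLookup that stands in for real I/O. Two "requests" each wait 200ms, yet both finish in roughly 200ms in total, because the single thread never blocks while waiting.

```javascript
// Hypothetical stand-in for a database call: resolves after `ms` milliseconds
// without blocking the thread.
const fakeDbLookup = (id, ms) =>
  new Promise((resolve) => setTimeout(() => resolve({ id }), ms));

async function handleTwoRequests() {
  const start = Date.now();
  // Both lookups are in flight at once; the loop is free while they wait.
  const results = await Promise.all([fakeDbLookup(1, 200), fakeDbLookup(2, 200)]);
  return { results, elapsedMs: Date.now() - start };
}

handleTwoRequests().then(({ results, elapsedMs }) => {
  // Roughly 200ms rather than 400ms, because the waits overlap.
  console.log(`${results.length} lookups in about ${elapsedMs}ms`);
});
```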

The loop itself is semi-infinite, meaning that once the call stack and the callback queue are both empty, it can exit. Think of the call stack as synchronous code that unwinds, like console.log, before the loop polls for more work. Node uses libuv under the covers to poll the operating system for callbacks from incoming connections.
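To see the call stack unwind before the loop polls for more work, you can record when different kinds of callbacks run. In this sketch the synchronous push runs first. The promise callback runs as a microtask as soon as the stack is empty. The zero-delay timer callback waits for the loop to reach its timers phase. The labels are a simplified model for illustration.

```javascript
// Record the order in which different kinds of work run.
const order = [];

setTimeout(() => order.push('timer callback'), 0);           // queued for a later loop iteration
Promise.resolve().then(() => order.push('promise callback')); // runs once the stack is empty
order.push('synchronous code');                                // runs immediately, on the call stack

// Print after all of the above has had a chance to run.
setTimeout(() => console.log(order.join(' -> ')), 10);
// synchronous code -> promise callback -> timer callback
```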

You may be wondering why the event loop executes in a single thread. Threads are relatively heavy in memory, because each one needs its own data (such as its own stack), so one thread per connection adds up quickly. Threads are also operating system resources that take time to spin up, and this approach doesn’t scale to thousands of active connections.

Multiple threads also complicate the story in general. If a callback comes back with data, it must marshal context back to the executing thread. Context switching between threads is slow, because it must synchronize current state like the call stack or local variables. Because it’s single-threaded, the event loop sidesteps the bugs that arise when multiple threads share resources. A single-threaded loop cuts out thread-safety edge cases and can switch context much faster. This is the real genius behind the loop: it makes effective use of connections and threads while remaining scalable.

Enough theory; time to see what this looks like in code. Feel free to follow along in a REPL or download the source code.

The biggest question the event loop must answer is whether the loop is alive. If so, it figures out how long to wait on the callback queue. At each iteration, the loop unwinds the call stack, then polls.

Here’s an example that blocks the main loop:

setTimeout(
  () => console.log('Hi from the callback queue'),
  5000); // Keep the loop alive for this long

const stopTime = Date.now() + 2000;
while (Date.now() < stopTime) {} // Block the main loop

If you run this code, note the loop gets blocked for two seconds. But the loop stays alive until the callback executes in five seconds. Once the main loop unblocks, the polling mechanism figures out how long it waits on callbacks. This loop dies when the call stack unwinds and there are no more callbacks left.

Now, what happens when I block the main loop and then schedule a callback? While the loop is blocked, nothing else runs, so the callback doesn’t get queued until the block ends:

const stopTime = Date.now() + 2000;
while (Date.now() < stopTime) {} // Block the main loop

// This takes 7 secs to execute
setTimeout(() => console.log('Ran callback A'), 5000);

This time the loop stays alive for seven seconds. The event loop is dumb in its simplicity. It has no way of knowing what might get queued in the future. In a real system, incoming callbacks get queued and execute as the main loop is free to poll. The event loop goes through several phases sequentially when it’s unblocked. So, to ace that job interview about the loop, avoid fancy jargon like “event emitter” or “reactor pattern”. It’s a humble single-threaded loop, concurrent, and non-blocking.
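Both timings can be reproduced without waiting seven seconds using a toy model. This is a simplified sketch, not how Node or libuv is implemented: simulate, block, and the virtual clock are hypothetical names, a busy-wait simply advances the clock, and the loop keeps running while any timer is pending and exits as soon as none are left.

```javascript
// A toy, single-threaded model of the loop's "is it still alive?" logic.
function simulate(script) {
  let now = 0;          // virtual clock in ms
  const timers = [];    // pending callbacks: { due, fn }
  const api = {
    // Busy-wait: the clock advances and nothing else can run meanwhile.
    block(ms) { now += ms; },
    // Queue a callback that becomes due `ms` after the moment it is scheduled.
    setTimeout(fn, ms) { timers.push({ due: now + ms, fn }); },
  };

  script(api); // 1. run the main script until the call stack unwinds

  // 2. the loop stays alive while any callback is pending
  while (timers.length > 0) {
    timers.sort((a, b) => a.due - b.due);
    const next = timers.shift();
    now = Math.max(now, next.due); // wait until the earliest timer is due
    next.fn(api);                  // a callback may schedule more work
  }
  return now; // 3. nothing left to do: the loop exits at this virtual time
}

// Schedule first, then block: the 2 s block overlaps the 5 s timer.
const scheduleThenBlock = simulate(({ setTimeout, block }) => {
  setTimeout(() => {}, 5000);
  block(2000);
});

// Block first, then schedule: the timer only starts counting after the block.
const blockThenSchedule = simulate(({ setTimeout, block }) => {
  block(2000);
  setTimeout(() => {}, 5000);
});

console.log(scheduleThenBlock, blockThenSchedule); // 5000 7000
```

The model also shows why the loop "has no way of knowing what might get queued in the future": it only ever looks at timers that already exist.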

To avoid blocking the main loop, one idea is to wrap synchronous I/O in async/await:

const fs = require('fs');
const readFileSync = async (path) => await fs.readFileSync(path);

readFileSync('readme.md').then((data) => console.log(data));
console.log('The event loop continues without blocking...');

Anything that comes after the await runs later, as a queued callback. The code reads like synchronous code, and that continuation doesn’t block. Note, though, that async/await only makes the wrapper thenable: the fs.readFileSync call itself still runs on the main loop and blocks it until the file has been read. Think of anything that comes after await as a callback that runs once the awaited value is ready.

Full disclosure: the code above is for demonstration purposes only. In real code, I recommend fs.readFile, which fires a callback that can be wrapped in a promise. The general intent is still valid, because fs.readFile takes blocking I/O off the main loop.
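A minimal sketch of the non-blocking alternatives, assuming a CommonJS Node.js script. fs.promises.readFile, fs.readFile, and util.promisify are standard Node.js APIs; readText, readTextWrapped, and readFileAsync are illustrative names. In all three, the read happens outside the main loop (in libuv’s thread pool) and the result arrives later as a callback.

```javascript
const fs = require('fs');
const { promisify } = require('util');

// 1. The promise-based API that ships with Node.js.
const readText = (path) => fs.promises.readFile(path, 'utf8');

// 2. Wrapping the callback-style fs.readFile in a promise by hand.
const readTextWrapped = (path) =>
  new Promise((resolve, reject) => {
    fs.readFile(path, 'utf8', (err, data) => (err ? reject(err) : resolve(data)));
  });

// 3. Letting util.promisify do the wrapping.
const readFileAsync = promisify(fs.readFile);
```

For example, calling readText('readme.md').then(console.log) and then console.log('The event loop continues without blocking...') prints the second message first, because the file contents only arrive once the read completes.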

Continue reading The Node.js Event Loop: A Developer’s Guide to Concepts & Code on SitePoint.

A serious vulnerability exists in Google Drive that still awaits a fix. As discovered, the vulnerability allows an adversary to

Google Drive Vulnerability Allows Spearphishing Attacks on Latest Hacking News.

Several services from the Canadian government, including the national revenue agency, had to be shut down following a series of credential stuffing cyberattacks.

The post Cyber attacks: Several Canadian government services disrupted appeared first on WeLiveSecurity

From keeping your account safe to curating who can view your liked content, we look at how you can increase your security and privacy on TikTok

The post How to secure your TikTok account appeared first on WeLiveSecurity

One surprising difference between Deno and Node.js is the number of tools built into the runtime. Other than a Read-Eval-Print Loop (REPL) console, Node.js requires third-party modules to handle most indirect coding activities such as testing and linting. Deno provides almost everything you need out of the box.

Before we begin, a note. Deno is new! Use these tools with caution. Some may be unstable. Few have configuration options. Others may have undesirable side effects such as recursively processing every file in every subdirectory. It’s best to test tools from a dedicated project directory.

Install Deno on macOS or Linux using the following terminal command:

curl -fsSL https://deno.land/x/install/install.sh | sh

Or from Windows PowerShell:

iwr https://deno.land/x/install/install.ps1 -useb | iex

Further installation options are provided in the Deno manual.

Enter deno --version to check that the installation was successful. The version numbers for the V8 JavaScript engine, the TypeScript compiler, and Deno itself are displayed.

Upgrade Deno to the latest version with:

deno upgrade

Or upgrade to a specific release such as v1.3.0:

deno upgrade --version 1.3.0

Most of the tools below are available in all versions, but later editions may have more features and bug fixes.

A list of tools and options can be viewed by entering:

deno help

Like Node.js, a REPL expression evaluation console can be accessed by entering deno in your terminal. Each expression you enter returns a result or undefined:

$ deno
Deno 1.3.0
exit using ctrl+d or close()
> const w = 'World';
undefined
> w
World
> console.log(`Hello ${w}!`);
Hello World!
undefined
> close()
$

Previously entered expressions can be re-entered by using the cursor keys to navigate through the expression history.

A tree of all module dependencies can be viewed by entering deno info <module> where <module> is the path/URL to an entry script.

Consider the following lib.js library code with exported hello and sum functions:

// general library: lib.js

/**
 * return "Hello <name>!" string
 * @module lib
 * @param {string} name
 * @returns {string} Hello <name>!
 */
export function hello(name = 'Anonymous') {
  return `Hello ${ name.trim() }!`;
};

/**
 * Returns total of all arguments
 * @module lib
 * @param {...*} args
 * @returns {*} total
 */
export function sum(...args) {
  return [...args].reduce((a, b) => a + b);
}

These can be used from a main entry script, index.js, in the same directory:

// main entry script: index.js

// import lib.js modules
import { hello, sum } from './lib.js';

const
  spr = sum('Site', 'Point', '.com', ' ', 'reader'),
  add = sum(1, 2, 3);

// output
console.log( hello(spr) );
console.log( 'total:', add );

The result of running deno run ./index.js:

$ deno run ./index.js
Hello SitePoint.com reader!
total: 6

The dependencies used by index.js can be examined with deno info ./index.js:

$ deno info ./index.js
local: /home/deno/testing/index.js
type: JavaScript
deps:
file:///home/deno/testing/index.js
  └── file:///home/deno/testing/lib.js

Similarly, the dependencies required by any module URL can be examined, although be aware the module will be downloaded and cached locally on first use. For example:

$ deno info https://deno.land/std/hash/mod.ts
Download https://deno.land/std/hash/mod.ts
Download https://deno.land/std@0.65.0/hash/mod.ts
Download https://deno.land/std@0.65.0/hash/_wasm/hash.ts
Download https://deno.land/std@0.65.0/hash/hasher.ts
Download https://deno.land/std@0.65.0/hash/_wasm/wasm.js
Download https://deno.land/std@0.65.0/encoding/hex.ts
Download https://deno.land/std@0.65.0/encoding/base64.ts
deps:
https://deno.land/std/hash/mod.ts
  └─┬ https://deno.land/std@0.65.0/hash/_wasm/hash.ts
    ├─┬ https://deno.land/std@0.65.0/hash/_wasm/wasm.js
    │ └── https://deno.land/std@0.65.0/encoding/base64.ts
    ├── https://deno.land/std@0.65.0/encoding/hex.ts
    └── https://deno.land/std@0.65.0/encoding/base64.ts

For further information, see the Deno Manual: Dependency Inspector.

Deno provides a linter to validate JavaScript and TypeScript code. This is an unstable feature which requires the --unstable flag, but no files will be altered when it’s used.

Linting is useful to spot less obvious syntax errors and ensure code adheres to your team’s standards. You may already be using a linter such as ESLint in your editor or from the command line, but Deno provides another option in any environment where it’s installed.

To recursively lint all .js and .ts files in the current and child directories, enter deno lint --unstable:

$ deno lint --unstable
(no-extra-semi) Unnecessary semicolon.
};
^
    at /home/deno/testing/lib.js:13:1

Found 1 problem

Alternatively, you can specify one or more files to limit linting. For example:

$ deno lint --unstable ./index.js
$

For further information, see the Deno Manual: Linter. It includes a list of rules you can add to code comments to ignore or enforce specific syntaxes.

Deno has a built-in test runner for unit-testing JavaScript or TypeScript functions.

Tests are defined in any file named test, or with a name ending in _test or .test, with a .js, .mjs, .ts, .jsx, or .tsx extension (for example, lib.test.js). Each test file must make one or more calls to Deno.test, passing a test name string and a testing function. The function can be synchronous or asynchronous and can use a variety of assertion utilities to evaluate results.
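Assuming the default discovery rule described in the Deno manual for 1.x (a file named test, or a name ending in _test or .test, with one of the listed extensions), the match can be approximated with a regular expression. isTestFile is a hypothetical helper for illustration, not part of Deno.

```javascript
// Approximation of Deno 1.x's default test-file pattern (an assumption, see above):
// test.<ext>, <name>_test.<ext>, or <name>.test.<ext>.
const TEST_FILE = /(^|[._])test\.(js|mjs|ts|jsx|tsx)$/;

// Expects a bare file name such as "lib.test.js", not a full path.
function isTestFile(fileName) {
  return TEST_FILE.test(fileName);
}

console.log(isTestFile('lib.test.js'), isTestFile('latest.js'));
```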

Create a new test subdirectory with a file named lib.test.js:

// test lib.js library

// assertions
import { assertEquals } from 'https://deno.land/std/testing/asserts.ts';

// lib.js modules
import { hello, sum } from '../lib.js';

// hello function
Deno.test('lib/hello tests', () => {
  assertEquals( hello('Someone'), 'Hello Someone!');
  assertEquals( hello(), 'Hello Anonymous!' );
});

// sum integers
Deno.test('lib/sum integer tests', () => {
  assertEquals( sum(1, 2, 3), 6 );
  assertEquals( sum(1, 2, 3, 4, 5, 6), 21 );
});

// sum strings
Deno.test('lib/sum string tests', () => {
  assertEquals( sum('a', 'b', 'c'), 'abc' );
  assertEquals( sum('A', 'b', 'C'), 'AbC' );
});

// sum mixed values
Deno.test('lib/sum mixed tests', () => {
  assertEquals( sum('a', 1, 2), 'a12' );
  assertEquals( sum(1, 2, 'a'), '3a' );
  assertEquals( sum('an', null, [], 'ed'), 'annulled' );
});
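The last mixed assertion relies on JavaScript’s + coercion: null becomes the string "null" and an empty array becomes an empty string. This sketch repeats the reduce from lib.js while recording each intermediate total (traceSum is a hypothetical name). It also shows an edge case the tests don’t cover: with no arguments, reduce has no initial value and throws a TypeError, so calling sum() with no arguments would throw too.

```javascript
// Same reduce as lib.js's sum(), but recording each intermediate total.
function traceSum(...args) {
  const steps = [];
  const total = args.reduce((a, b) => {
    const next = a + b;
    steps.push(next);
    return next;
  });
  return { total, steps };
}

// 'an' + null -> 'annull', then + [] -> 'annull' (the array becomes ''), then + 'ed'
console.log(traceSum('an', null, [], 'ed'));

try {
  traceSum(); // empty array and no initial value for reduce
} catch (err) {
  console.log(err instanceof TypeError); // true
}
```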

To run all tests from all directories, enter deno test. Or run tests stored in a specific directory with deno test <dir>. For example:

$ deno test ./test
running 4 tests
test lib/hello tests ... ok (4ms)
test lib/sum integer tests ... ok (2ms)
test lib/sum string tests ... ok (2ms)
test lib/sum mixed tests ... ok (2ms)

test result: ok. 4 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out (11ms)
$

A --filter string or regular expression can also be specified to limit tests by name. For example:

$ deno test --filter "hello" ./test
running 1 tests
test lib/hello tests ... ok (4ms)

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 3 filtered out (5ms)

A long-running test suite can be stopped at the first failure by passing the --failfast option.
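To illustrate how test registration, filtering, and fail-fast behavior fit together, here is a toy test runner in plain JavaScript. It is not Deno’s implementation: test, run, and matches are hypothetical names, and treating a filter wrapped in slashes as a regular expression is an assumption about how --filter behaves.

```javascript
// Registered tests, in declaration order.
const registry = [];
const test = (name, fn) => registry.push({ name, fn });

// "/pattern/" is treated as a regular expression, anything else as a substring.
function matches(name, filter) {
  if (!filter) return true;
  if (filter.length > 1 && filter.startsWith('/') && filter.endsWith('/')) {
    return new RegExp(filter.slice(1, -1)).test(name);
  }
  return name.includes(filter);
}

async function run({ filter, failfast = false } = {}) {
  const summary = { passed: 0, failed: 0, filtered: 0 };
  for (const { name, fn } of registry) {
    if (!matches(name, filter)) {
      summary.filtered++;
      continue;
    }
    try {
      await fn(); // works for both synchronous and asynchronous test functions
      summary.passed++;
    } catch (err) {
      summary.failed++;
      console.log(`test ${name} ... FAILED: ${err.message}`);
      if (failfast) break; // stop at the first failure
    }
  }
  return summary;
}

// Sample registrations: one failing test sits between two passing ones.
test('lib/hello tests', () => {});
test('lib/sum broken test', () => { throw new Error('boom'); });
test('lib/sum integer tests', async () => {});

run({ filter: 'hello' }).then((summary) => console.log(summary));
```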

For further information, see the Deno Manual: Testing. A few third-party test modules are also available, including Merlin and Ruhm, but these still use Deno.test beneath the surface.

Continue reading How to Use Deno’s Built-in Tools on SitePoint.

Should I choose Angular or React? Each framework has a lot to offer and it’s not easy to choose between them. Whether you’re a newcomer trying to figure out where to start, a freelancer picking a framework for your next project, or an enterprise-grade architect planning a strategic vision for your company, you’re likely to benefit from having an educated view on this topic.

To save you some time, let me tell you something up front: this article won’t give a clear answer on which framework is better. But neither will hundreds of other articles with similar titles. I can’t tell you that, because the answer depends on a wide range of factors which make a particular technology more or less suitable for your environment and use case.

Since we can’t answer the question directly, we’ll attempt something else. We’ll compare Angular and React, to demonstrate how you can approach the problem of comparing any two frameworks in a structured manner on your own and tailor it to your environment. You know, the old “teach a man to fish” approach. That way, when both are replaced by a BetterFramework.js in a year’s time, you’ll be able to re-create the same train of thought once more.

We’ve just overhauled this guide to reflect the state of React, Angular, and their respective advantages and disadvantages in 2020.

Before you pick any tool, you need to answer two simple questions: “Is this a good tool per se?” and “Will it work well for my use case?” Neither means anything on its own, so you always need to keep both in mind. All right, the questions might not be that simple, so we’ll try to break them down into smaller ones.

Questions on the tool itself:

Questions for self-reflection:

Using this set of questions, you can start your assessment of any tool, and we’ll base our comparison of React and Angular on them as well.

There’s another thing we need to take into account. Strictly speaking, it’s not exactly fair to compare Angular to React, since Angular is a full-blown, feature-rich framework, while React is just a UI component library. To even the odds, we’ll talk about React in conjunction with some of the libraries often used with it.

An important part of being a skilled developer is being able to keep the balance between established, time-proven approaches and evaluating new bleeding-edge tech. As a general rule, you should be careful when adopting tools that haven’t yet matured due to certain risks:

React is developed and maintained by Facebook and used in their products, including Instagram and WhatsApp. It has been around since 2013, so it’s not exactly new. It’s also one of the most popular projects on GitHub, with more than 150,000 stars at the time of writing. Some of the other notable companies using React are Airbnb, Uber, Netflix, Dropbox, and Atlassian. Sounds good to me.

Angular has been around since 2016, making it slightly younger than React, but it’s also not a new kid on the block. It’s maintained by Google and, as mentioned by Igor Minar, even in 2018 was used in more than 600 applications at Google, such as Firebase Console, Google Analytics, Google Express, Google Cloud Platform, and more. Outside of Google, Angular is used by Forbes, Upwork, VMware, and others.

Like I mentioned earlier, Angular has more features out of the box than React. This can be both a good and a bad thing, depending on how you look at it.

Both frameworks share some key features in common: components, data binding, and platform-agnostic rendering.

Angular provides a lot of the features required for a modern web application out of the box. Some of the standard features are:

- Routing, provided by @angular/router
- HTTP requests, using @angular/common/http
- @angular/forms for building forms

Some of these features are built into the core of the framework, and you can’t opt out of them. This requires developers to be familiar with features such as dependency injection to build even a small Angular application. Other features, such as the HTTP client or forms, are completely optional and can be added as needed.

With React, you’re starting with a more minimalistic approach. If we’re looking at just React, here’s what we have:

Out of the box, React does not provide anything for dependency injection, routing, HTTP calls, or advanced form handling. You are expected to choose whichever additional libraries you need, which can be both a good and a bad thing depending on how experienced you are with these technologies. Some of the popular libraries that are often used together with React are:

The teams I’ve worked with have found the freedom of choosing your libraries liberating. This gives us the ability to tailor our stack to particular requirements of each project, and we haven’t found the cost of learning new libraries that high.

Continue reading React vs Angular: An In-depth Comparison on SitePoint.

A serious spoofing vulnerability affected Google’s Gmail service. However, despite discovery and responsible disclosure, the tech giant delivered the fix

Google Fixed A Gmail Vulnerability Just 7 hours From Public Disclosure on Latest Hacking News.

Taking another step towards security, the cybersecurity firm FireEye has publicly launched its bug bounty program. While the program initially

FireEye Bug Bounty Program Now Available To The Public on Latest Hacking News.

These days, with everybody across the globe self-isolating, it is essential to make sure your website is as optimized as possible. After all, you want people to visit your site and stick around, right?

With WordPress, you can easily personalize any part of your site, including the theme you use. However, changing the theme’s code directly can be risky: you may lose your modifications when you update it. A child theme avoids that risk.

A child theme inherits the look and functionality of its ‘parent’. You can create one easily and use it to edit your theme efficiently and safely. If you are looking for a guide on how to create and customize a WordPress child theme, this post has you covered.

A child theme takes on the overall design and functionality of its parent, so it behaves like a copy of the original. You can then modify it without affecting the parent.

Suppose you want to change your theme’s functions.php file to add a new feature. You can dig into that code and make the needed modifications. Soon after, however, a new version of your theme is released, and you install it right away, because keeping your WordPress components updated is smart.

Upgrading the theme overwrites the changes you made to its core files. The new feature you added vanishes, and you have to start from scratch again.

Alternatively, you can create a child theme and modify its functions.php file instead. The child theme is applied on top of the parent theme, so your modifications are preserved every time the parent is updated.

Keep in mind that you could carry out the steps in this guide directly on your server through an FTP client. However, it’s best to set everything up locally first. You can then zip the theme’s folder and install it like a standard theme through the Themes menu.

A child theme needs its own folder containing a style sheet (style.css) and a functions.php file. Themes live in wp-content/themes in your WordPress installation. Go there, create a new folder for the child theme, and remember its name.

You can follow the steps in this guide with any parent theme you like. The name you give its child makes no technical difference either, but it’s best to pick something that’s easy to remember.

The next step is to create the file that WordPress uses to identify new themes. It’s called style.css, and besides identifying the theme, it holds the theme’s CSS styles.

Create a new file in the child theme’s directory and name it style.css. Once it’s created, right-click it and choose View/Edit.

That will open the file in your local text editor. It should be empty for now. Copy the code and paste it into the new style.css file, then modify the placeholders.

Replace every instance of the parent theme’s name with the name of the theme you are using, and add your own domain and details. Save your changes to style.css and close it.

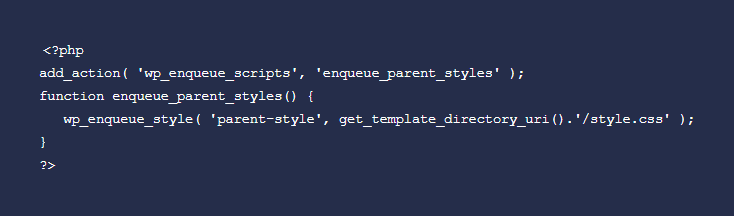

For the child theme to keep the parent’s functionality and style, it must import the parent’s code. You can achieve that through enqueueing.

To get started, create a new file named functions.php in the child theme’s directory. Open it and paste in the following code:

Save your changes to functions.php. You will notice that this code doesn’t refer to the parent theme by name. That’s because we already specified which template to import when we edited the style.css file.

You could instead copy and paste the contents of the parent theme’s functions.php file. However, with that approach you would have to update the copied code by hand every time the parent theme is updated.

Enqueueing the parent theme’s scripts and styles lets you avoid that problem. The child theme should now work; all that is left is to customize and activate it.
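If you build the theme locally, the folder and both files can be generated with a small Node.js script. This is a sketch under assumptions: scaffoldChildTheme, the child folder name, and the twentytwenty parent slug are hypothetical, and the functions.php it writes uses the common wp_enqueue_style pattern for loading the parent stylesheet rather than this guide’s exact code. The Template line in style.css must match the parent theme’s folder name.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: writes the two files a minimal child theme needs.
// `parentSlug` must be the parent theme's folder name (e.g. "twentytwenty").
function scaffoldChildTheme(themesDir, childSlug, parentSlug, author = 'Your Name') {
  const dir = path.join(themesDir, childSlug);
  fs.mkdirSync(dir, { recursive: true });

  // style.css header: "Template" tells WordPress which theme is the parent.
  const styleCss = `/*
Theme Name: ${childSlug}
Template: ${parentSlug}
Author: ${author}
Version: 1.0.0
*/
`;

  // functions.php: enqueue the parent's stylesheet so the child inherits its styles.
  // get_template_directory_uri() points at the parent theme's folder.
  const functionsPhp = `<?php
add_action( 'wp_enqueue_scripts', 'child_enqueue_parent_styles' );
function child_enqueue_parent_styles() {
    wp_enqueue_style( 'parent-style', get_template_directory_uri() . '/style.css' );
}
`;

  fs.writeFileSync(path.join(dir, 'style.css'), styleCss);
  fs.writeFileSync(path.join(dir, 'functions.php'), functionsPhp);
  return dir;
}
```

You would then zip the generated folder and upload it like any other theme.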

Modifying your child theme works much like editing any other theme. For instance, you can change your website’s style by editing the child theme’s style.css file: simply add the new CSS you want below the theme description you created earlier.

Note that the CSS rules you add will override conflicting styles from the parent theme, and template files you add to the child theme replace the parent’s versions. The one exception is functions.php: a child theme’s functions.php is loaded in addition to the parent’s, not instead of it.

You should see the child theme listed when you go to your dashboard and navigate to Appearance > Themes.

Activating the child theme works just like activating any other theme. After you click the Activate button, go ahead and check your homepage.

If you were previously using the parent theme, your website should look exactly as it did before. You won’t see any difference between the two until you start making modifications.

Remember that you can’t remove the parent theme’s files while you’re using a child theme. Otherwise, the child theme won’t be able to import the functions and styles it needs to run correctly.

Likewise, keep checking for updates to the parent theme. Using a child theme doesn’t exempt you from security best practices.

Are you planning to add new functions to your WordPress theme? Then bear in mind that the most efficient and safest way to do so is with a child theme. Luckily, creating and installing one isn’t difficult.

Did you find this post helpful? What are your thoughts about this guide?

The post How to Make and Customize a WordPress Child Theme appeared first on The Crazy Programmer.

Japanese technology giant Konica Minolta has now fallen prey to a cyber attack. Konica Minolta has suffered a ransomware attack,

Konica Minolta Attacked By A New Ransomware on Latest Hacking News.

Hello, Programmers! Many of us have worked with Python, and at some point most of us have run into the error “Python is not recognized as an internal or external command”.

This error has two main causes: either Python isn’t installed on your system, or it is installed but its path isn’t configured properly.

In this post, we’ll look at both causes and how to fix each one.

Sometimes Python simply isn’t installed on the system, so when we try to run python from the Command Prompt or PowerShell, we get the “Python is not recognized” error.

To fix this, open your browser, download the latest version of Python for your PC from python.org, and install it on your system.

While installing, don’t forget to check the box that says, Add Python to PATH. This will install and also set the path for you at the same time.

Now open your Command Prompt or PowerShell and type python. This opens the Python shell in that window.

If this was the cause of your “Python is not recognized” problem, it should now be solved.

The next fix is for those who have already installed Python on their PC but still can’t run it from the Command Prompt or PowerShell.

This is one of the most common reasons for the error: we install Python but forget to add it to the PATH, so the shell can’t find it.

Program Files folder. Now click OK, and you are done with the path setup.

Now open the Command Prompt, type python, and press Enter. The Python shell will open and display the installed version.
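Why does a missing PATH entry produce this error? Roughly, when you type a bare command, Windows tries each directory listed in PATH, combined with each extension in PATHEXT, until it finds a match. The JavaScript sketch below models that search; resolveCommand and the injected exists function are hypothetical, and it ignores details such as cmd.exe checking the current directory first.

```javascript
// Rough model of how Windows resolves a bare command such as "python".
// `exists` is injected so the sketch can run without touching the disk;
// Windows compares paths case-insensitively, so `exists` should too.
function resolveCommand(cmd, { pathVar, pathExt = '.COM;.EXE;.BAT;.CMD', exists }) {
  const dirs = pathVar.split(';').filter(Boolean);
  const exts = pathExt.split(';').filter(Boolean);
  for (const dir of dirs) {
    for (const ext of exts) {
      // Strip a trailing backslash, then join directory, command, and extension.
      const candidate = `${dir.replace(/\\$/, '')}\\${cmd}${ext}`;
      if (exists(candidate)) return candidate;
    }
  }
  // No match anywhere: this is when the shell reports
  // "'python' is not recognized as an internal or external command".
  return null;
}
```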

If you found this post helpful, please share it with friends or colleagues who are learning Python programming.

And if you’ve started with Python development and got stuck on a problem or bug, leave a comment here and we will get back to you soon.

The post Solved: Python is not recognized as an internal or external command appeared first on The Crazy Programmer.

This week, ESET researchers analyze fraud emails from the infamous Grandoreiro banking Trojan, impersonating the Agencia Tributaria, Spain’s tax agency. Our security expert Jake Moore demonstrates how easy it is to clone an Instagram account and lure people to give money; learn how to protect yourself. Finally, have you thought about what will happen to your

The post Week in security with Tony Anscombe appeared first on WeLiveSecurity

Beware the tax bogeyman – there are tax scams aplenty

The post Grandoreiro banking trojan impersonates Spain’s tax agency appeared first on WeLiveSecurity

Researchers have come up with a new attack that creates 3D-printed physical keys. Dubbed Spikey, the technique involves listening to

Spikey Attack Can Duplicate Physical Keys By Listening To Click Sounds on Latest Hacking News.

Cisco has recently addressed a serious vulnerability affecting its vWAAS product. Exploiting the bug could give admin rights to an

Cisco Patched Critical Vulnerability In Cisco vWAAS on Latest Hacking News.