This article was originally published on the Okta developer blog. Thank you for supporting the partners who make SitePoint possible.

JavaScript is used everywhere on the web - nearly every web page will include at least some JavaScript, and even if it doesn’t, your browser probably has some sort of extension that injects bits of JavaScript code onto the page anyway. It’s hard to avoid in 2018.

JavaScript can also be used outside the context of a browser, for anything from hosting a web server to controlling an RC car or running a full-fledged operating system. Sometimes you want a couple of servers to talk to each other, whether on a local network or over the internet.

Today, I’ll show you how to create a REST API using Node.js, and secure it with OAuth 2.0 to prevent unwarranted requests. REST APIs are all over the web, but without the proper tools they require a ton of boilerplate code. I’ll show you how to use a couple of amazing tools that make it all a breeze, including Okta to implement the Client Credentials Flow, which securely connects two machines together without the context of a user.

Build Your Node Server

Setting up a web server in Node is quite simple using the Express JavaScript library. Make a new folder that will contain your server.

$ mkdir rest-api

Node uses a package.json file to manage dependencies and define your project. To create one, use npm init, which will ask you some questions to help you initialize the project. For now, you can use Standard JS to enforce a coding style, and use it as the test command.

$ cd rest-api

$ npm init

This utility will walk you through creating a package.json file.

It only covers the most common items, and tries to guess sensible defaults.

See `npm help json` for definitive documentation on these fields

and exactly what they do.

Use `npm install <pkg>` afterwards to install a package and

save it as a dependency in the package.json file.

Press ^C at any time to quit.

package name: (rest-api)

version: (1.0.0)

description: A parts catalog

entry point: (index.js)

test command: standard

git repository:

keywords:

author:

license: (ISC)

About to write to /Users/Braden/code/rest-api/package.json:

{
  "name": "rest-api",
  "version": "1.0.0",
  "description": "A parts catalog",
  "main": "index.js",
  "scripts": {
    "test": "standard"
  },
  "author": "",
  "license": "ISC"
}

Is this OK? (yes)

The default entry point is index.js, so you should create a new file by that name. The following code gives you a very basic server that does nothing yet except listen on port 3000 by default.

index.js

const express = require('express')
const bodyParser = require('body-parser')
const { promisify } = require('util')

const app = express()
app.use(bodyParser.json())

const startServer = async () => {
  const port = process.env.SERVER_PORT || 3000
  await promisify(app.listen).bind(app)(port)
  console.log(`Listening on port ${port}`)
}

startServer()

The promisify function from util takes a function that expects a callback and returns a version that returns a Promise instead, which is now the standard way to handle asynchronous code. This also lets you use the relatively new async/await syntax, which makes the code much easier to read.
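To see promisify in isolation, here is a minimal sketch. The addLater function is made up for illustration (it is not part of Express or of this project); it simply follows the Node convention of calling back with an error first and a result second.

```javascript
const { promisify } = require('util')

// Hypothetical callback-style function, used only for illustration.
// It follows the Node convention of calling back with (error, result).
const addLater = (a, b, callback) => {
  setTimeout(() => callback(null, a + b), 10)
}

// promisify returns a new function that returns a Promise instead
// of taking a callback as its last argument.
const addLaterAsync = promisify(addLater)

const main = async () => {
  // await pauses this function until the Promise settles.
  const sum = await addLaterAsync(2, 3)
  console.log(`2 + 3 = ${sum}`)
  return sum
}

const pending = main()
```

The same idea applies to app.listen in index.js: it accepts a callback as its last argument, so the promisified version can be awaited before logging that the server is up.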

In order for this to work, you need to install the dependencies that you require at the top of the file. Add them using npm install. This will automatically save some metadata to your package.json file and install them locally in a node_modules folder.

Note: You should never commit node_modules to source control because it tends to become bloated quickly, and the package-lock.json file will keep track of the exact versions you used so that if you install this on another machine, you get the same code.

$ npm install express@4.16.3 body-parser@1.18.3 util@0.11.0

For some quick linting, install standard as a dev dependency, then run it to make sure your code is up to par.

$ npm install --save-dev standard@11.0.1

$ npm test

> rest-api@1.0.0 test /Users/bmk/code/okta/apps/rest-api

> standard

If all is well, you shouldn’t see any output past the > standard line. If there’s an error, it might look like this:

$ npm test

> rest-api@1.0.0 test /Users/bmk/code/okta/apps/rest-api

> standard

standard: Use JavaScript Standard Style (https://standardjs.com)

standard: Run `standard --fix` to automatically fix some problems.

/Users/Braden/code/rest-api/index.js:3:7: Expected consistent spacing

/Users/Braden/code/rest-api/index.js:3:18: Unexpected trailing comma.

/Users/Braden/code/rest-api/index.js:3:18: A space is required after ','.

/Users/Braden/code/rest-api/index.js:3:38: Extra semicolon.

npm ERR! Test failed. See above for more details.

Now that your code is ready and you have installed your dependencies, you can run your server with node . (the . says to look at the current directory, and then checks your package.json file to see that the main file to use in this directory is index.js):

$ node .

Listening on port 3000

To test that it’s working, you can use the curl command. There are no endpoints yet, so Express will return an error:

$ curl localhost:3000 -i

HTTP/1.1 404 Not Found

X-Powered-By: Express

Content-Security-Policy: default-src 'self'

X-Content-Type-Options: nosniff

Content-Type: text/html; charset=utf-8

Content-Length: 139

Date: Thu, 16 Aug 2018 01:34:53 GMT

Connection: keep-alive

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>Error</title>

</head>

<body>

<pre>Cannot GET /</pre>

</body>

</html>

Even though it says it’s an error, that’s good. You haven’t set up any endpoints yet, so the only thing for Express to return is a 404 error. If your server wasn’t running at all, you’d get an error like this:

$ curl localhost:3000 -i

curl: (7) Failed to connect to localhost port 3000: Connection refused
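The same two outcomes can be told apart from Node itself. The sketch below is only an illustration that uses the built-in http module: a bare server that answers every request with a 404 stands in for the route-less Express app, and probe is a hypothetical helper name. It checks the port once while the server is running and once after it has been closed.

```javascript
const http = require('http')

// Resolve with the HTTP status code, or with the error code
// (for example ECONNREFUSED) if no connection can be made.
const probe = (port) => new Promise((resolve) => {
  http.get({ host: '127.0.0.1', port, path: '/', agent: false }, (res) => {
    res.resume() // discard the body so the socket can close
    resolve(res.statusCode)
  }).on('error', (err) => resolve(err.code))
})

const demo = async () => {
  // Stand-in for an Express app with no routes: everything is a 404.
  const server = http.createServer((req, res) => {
    res.statusCode = 404
    res.end('Cannot GET /')
  })

  // Port 0 asks the operating system for any free port.
  await new Promise((resolve) => server.listen(0, '127.0.0.1', resolve))
  const { port } = server.address()

  const whileRunning = await probe(port)
  await new Promise((resolve) => server.close(resolve))
  const afterClose = await probe(port)

  return { whileRunning, afterClose }
}

const outcome = demo()
outcome.then(console.log)
```

A numeric status means something answered on the port, even if the answer was an error page; an error code means nothing was listening at all.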

Build Your REST API with Express, Sequelize, and Epilogue

Now that you have a working Express server, you can add a REST API. This is actually much simpler than you might think. The easiest way I’ve seen is by using Sequelize to define your database schema, and Epilogue to create some REST API endpoints with near-zero boilerplate.

You’ll need to add those dependencies to your project. Sequelize also needs to know how to communicate with the database. For now, use SQLite as it will get us up and running quickly.

$ npm install sequelize@4.38.0 epilogue@0.7.1 sqlite3@4.0.2

Create a new file database.js with the following code. I’ll explain each part in more detail below.

database.js

const Sequelize = require('sequelize')
const epilogue = require('epilogue')

const database = new Sequelize({
  dialect: 'sqlite',
  storage: './test.sqlite',
  operatorsAliases: false
})

const Part = database.define('parts', {
  partNumber: Sequelize.STRING,
  modelNumber: Sequelize.STRING,
  name: Sequelize.STRING,
  description: Sequelize.TEXT
})

const initializeDatabase = async (app) => {
  epilogue.initialize({ app, sequelize: database })

  epilogue.resource({
    model: Part,
    endpoints: ['/parts', '/parts/:id']
  })

  await database.sync()
}

module.exports = initializeDatabase

Now you just need to import that file into your main app and run the initialization function. Make the following additions to your index.js file.

index.js

@@ -2,10 +2,14 @@ const express = require('express')
 const bodyParser = require('body-parser')
 const { promisify } = require('util')
 
+const initializeDatabase = require('./database')
+
 const app = express()
 app.use(bodyParser.json())
 
 const startServer = async () => {
+  await initializeDatabase(app)
+
   const port = process.env.SERVER_PORT || 3000
   await promisify(app.listen).bind(app)(port)
   console.log(`Listening on port ${port}`)

You can now test for syntax errors and run the app if everything seems good:

$ npm test && node .

> rest-api@1.0.0 test /Users/bmk/code/okta/apps/rest-api

> standard

Executing (default): CREATE TABLE IF NOT EXISTS `parts` (`id` INTEGER PRIMARY KEY AUTOINCREMENT, `partNumber` VARCHAR(255), `modelNumber` VARCHAR(255), `name` VARCHAR(255), `description` TEXT, `createdAt` DATETIME NOT NULL, `updatedAt` DATETIME NOT NULL);

Executing (default): PRAGMA INDEX_LIST(`parts`)

Listening on port 3000

In another terminal, you can test that this is actually working (to format the JSON response I use a json CLI, installed globally using npm install --global json):

$ curl localhost:3000/parts

[]

$ curl localhost:3000/parts -X POST -d '{
  "partNumber": "abc-123",
  "modelNumber": "xyz-789",
  "name": "Alphabet Soup",
  "description": "Soup with letters and numbers in it"
}' -H 'content-type: application/json' -s0 | json
{
  "id": 1,
  "partNumber": "abc-123",
  "modelNumber": "xyz-789",
  "name": "Alphabet Soup",
  "description": "Soup with letters and numbers in it",
  "updatedAt": "2018-08-16T02:22:09.446Z",
  "createdAt": "2018-08-16T02:22:09.446Z"
}

$ curl localhost:3000/parts -s0 | json
[
  {
    "id": 1,
    "partNumber": "abc-123",
    "modelNumber": "xyz-789",
    "name": "Alphabet Soup",
    "description": "Soup with letters and numbers in it",
    "createdAt": "2018-08-16T02:22:09.446Z",
    "updatedAt": "2018-08-16T02:22:09.446Z"
  }
]

What’s Going On Here?

Feel free to skip this section if you followed along with all that, but I did promise an explanation.

The Sequelize constructor sets up the database connection. This is where you configure details such as which dialect of SQL to use. For now, use SQLite to get up and running quickly.

const database = new Sequelize({
  dialect: 'sqlite',
  storage: './test.sqlite',
  operatorsAliases: false
})

Once you’ve created the database, you can define the schema for it using database.define for each table. Create a table called parts with a few useful fields to keep track of parts. By default, Sequelize also automatically creates and updates id, createdAt, and updatedAt fields when you create or update a row.

const Part = database.define('parts', {
  partNumber: Sequelize.STRING,
  modelNumber: Sequelize.STRING,
  name: Sequelize.STRING,
  description: Sequelize.TEXT
})

Epilogue requires access to your Express app in order to add endpoints. However, app is defined in another file. One way to deal with this is to export a function that takes the app and does something with it. When you import this script in the other file, you run it as initializeDatabase(app).
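The pattern of exporting a function that receives the app can be sketched without Express at all. Everything in the snippet below is hypothetical: registerRoutes stands in for the function a module would export, and createFakeApp is a tiny stand-in object that only records which routes were registered, so the example runs with nothing installed.

```javascript
// Stand-in for a function that would live in its own file and be
// exported with `module.exports = registerRoutes`. Hypothetical name.
const registerRoutes = (app) => {
  app.get('/health', (req, res) => res.end('ok'))
}

// Minimal fake of an Express app. It only records registered routes;
// a real app would come from express().
const createFakeApp = () => {
  const routes = []
  return {
    routes,
    get: (path, handler) => routes.push({ method: 'GET', path, handler })
  }
}

// The caller owns the app and hands it to the imported function,
// the same shape as initializeDatabase(app) in index.js.
const app = createFakeApp()
registerRoutes(app)

console.log(app.routes.map(({ method, path }) => `${method} ${path}`))
```

Because the function receives the app as an argument, the module never needs to import the file that creates the app, which avoids a circular dependency between the two files.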

Epilogue needs to initialize with both the app and the database. You then define which REST endpoints you would like to use. The resource function will include endpoints for the GET, POST, PUT, and DELETE verbs, mostly automagically.
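As a rough map of what that means for the parts resource, the sketch below lists the routes you would expect from the two endpoints. It does not call Epilogue; describeRoutes is a hypothetical helper, and the action names and verb mapping reflect my understanding of Epilogue's defaults, so treat them as an assumption and check them against the Epilogue documentation.

```javascript
// Assumption: Epilogue's default actions map onto the collection
// endpoint and the single-item endpoint as listed here.
const describeRoutes = ([collection, item]) => [
  { action: 'list', method: 'GET', path: collection },
  { action: 'create', method: 'POST', path: collection },
  { action: 'read', method: 'GET', path: item },
  { action: 'update', method: 'PUT', path: item },
  { action: 'delete', method: 'DELETE', path: item }
]

// Same endpoints array that is passed to epilogue.resource above.
const routes = describeRoutes(['/parts', '/parts/:id'])

routes.forEach(({ action, method, path }) => {
  console.log(`${action.padEnd(6)} ${method.padEnd(6)} ${path}`)
})
```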

To actually create the database, you need to run database.sync(), which returns a Promise. You’ll want to wait until it’s finished before starting your server.

The module.exports assignment makes the initializeDatabase function available to any other file that requires this one.

const initializeDatabase = async (app) => {
  epilogue.initialize({ app, sequelize: database })

  epilogue.resource({
    model: Part,
    endpoints: ['/parts', '/parts/:id']
  })

  await database.sync()
}

module.exports = initializeDatabase

Secure Your Node + Express REST API with OAuth 2.0

Now that you have a REST API up and running, imagine you’d like a specific application to use this from a remote location. If you host this on the internet as is, then anybody can add, modify, or remove parts at will.

To avoid this, you can use the OAuth 2.0 Client Credentials Flow. This is a way of letting two servers communicate with each other, without the context of a user. The two servers must agree ahead of time to use a third-party authorization server. Assume there are two servers, A and B, and an authorization server. Server A is hosting the REST API, and Server B would like to access the API.

- Server B sends a secret key to the authorization server to prove who they are and asks for a temporary token.

- Server B then consumes the REST API as usual but sends the token along with the request.

- Server A asks the authorization server for some metadata that can be used to verify tokens.

- Server A verifies Server B’s request.

- If it’s valid, a successful response is sent and Server B is happy.

- If the token is invalid, an error message is sent instead, and no sensitive information is leaked.
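The request shapes behind the steps above can be sketched in a few lines. This is only an illustration under stated assumptions: the token URL, client ID, client secret, and scope are placeholders, the helper names are hypothetical, and nothing is actually sent over the network. Verifying the token's signature and claims on Server A is deliberately left out.

```javascript
// Step 1: Server B asks the authorization server for a token.
// The client ID and secret travel in a Basic Authorization header,
// and the form body names the client_credentials grant type.
const buildTokenRequest = ({ tokenUrl, clientId, clientSecret, scope }) => {
  const basic = Buffer.from(`${clientId}:${clientSecret}`).toString('base64')
  const form = new URLSearchParams({ grant_type: 'client_credentials', scope })
  return {
    url: tokenUrl,
    method: 'POST',
    headers: {
      authorization: `Basic ${basic}`,
      'content-type': 'application/x-www-form-urlencoded'
    },
    body: form.toString()
  }
}

// Step 2: Server B sends the token along with each API request.
const bearerHeaders = (accessToken) => ({
  authorization: `Bearer ${accessToken}`
})

// Server A's side: pull the token out of the incoming header.
// Returns null when the header is missing or malformed.
const extractBearerToken = (authorizationHeader = '') => {
  const match = authorizationHeader.match(/^Bearer (.+)$/)
  return match ? match[1] : null
}

// Placeholder values, for illustration only.
const tokenRequest = buildTokenRequest({
  tokenUrl: 'https://auth.example.com/token',
  clientId: 'id',
  clientSecret: 'secret',
  scope: 'example_scope'
})

console.log(tokenRequest.method, tokenRequest.url)
console.log(tokenRequest.body)
console.log(extractBearerToken(bearerHeaders('example-token').authorization))
```

Keeping request construction separate from sending makes each piece easy to inspect before wiring it to a real HTTP client.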

Create an Authorization Server

This is where Okta comes into play. Okta can act as an authorization server to allow you to secure your data. You’re probably asking yourself, “Why Okta?” Well, it’s pretty cool to build a REST app, but it’s even cooler to build a secure one. To achieve that, you’ll want to add authentication so that callers have to identify themselves before viewing or modifying parts. At Okta, our goal is to make identity management a lot easier, more secure, and more scalable than what you’re used to. Okta is a cloud service that allows developers to create, edit, and securely store user accounts and user account data, and connect them with one or multiple applications.

If you don’t already have one, sign up for a forever-free developer account, and let’s get started!

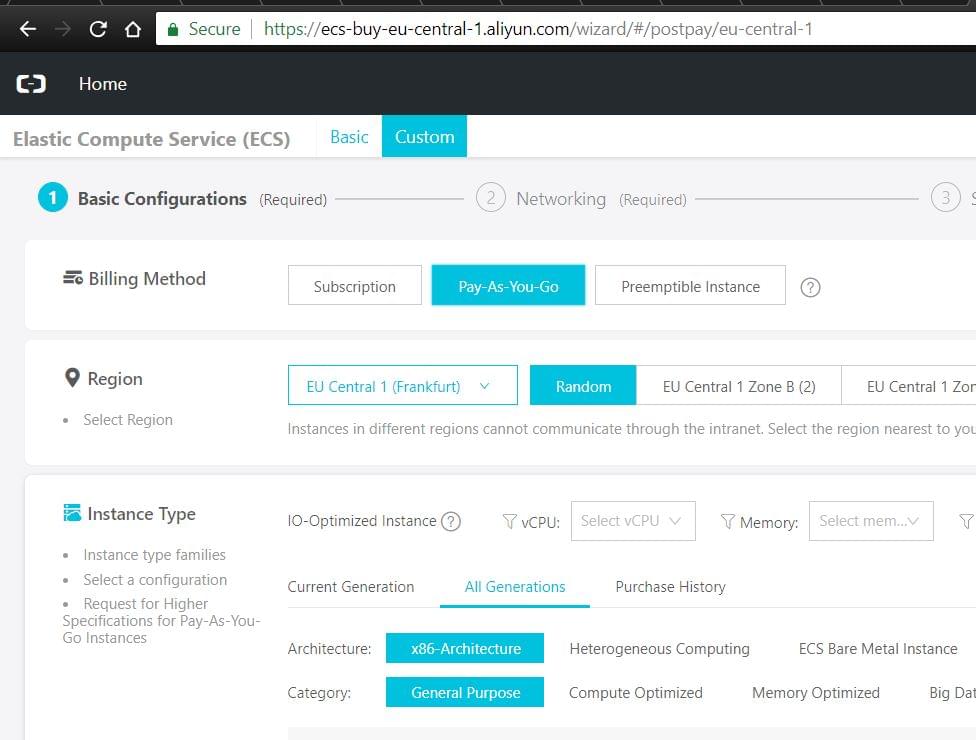

After creating your account, log in to your developer console, navigate to API, then to the Authorization Servers tab. Click on the link to your default server.

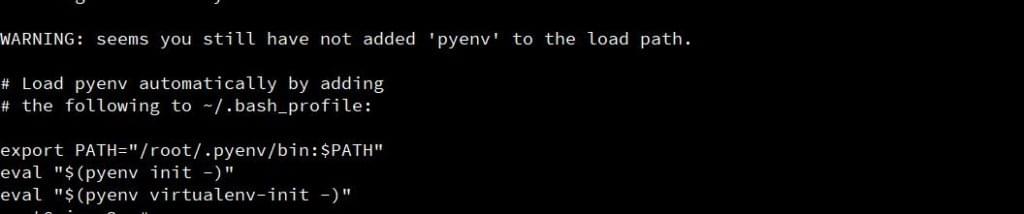

From this Settings tab, copy the Issuer field. You’ll need to save this somewhere that your Node app can read. In your project, create a file named .env that looks like this:

.env

ISSUER=https://{yourOktaDomain}/oauth2/default

The value for ISSUER should be the value from the Settings page’s Issuer URI field.

Note: As a general rule, you should not store this .env file in source control. This allows multiple projects to use the same source code without needing a separate fork. It also makes sure that your secure information is not public (especially if you’re publishing your code as open source).
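To make the idea of a file "your Node app can read" concrete, here is a minimal sketch of turning .env-style text into an object. The parseEnv helper is hypothetical and handles only plain KEY=value lines (no quoting or escapes); in practice a package such as dotenv is a common choice for this job.

```javascript
// Minimal sketch: parse simple KEY=value lines, skipping blank
// lines and lines that start with #.
const parseEnv = (text) => {
  const values = {}
  for (const line of text.split(/\r?\n/)) {
    const trimmed = line.trim()
    if (!trimmed || trimmed.startsWith('#')) continue

    // Split on the first = only, so values may contain = themselves.
    const index = trimmed.indexOf('=')
    if (index === -1) continue

    const key = trimmed.slice(0, index).trim()
    values[key] = trimmed.slice(index + 1).trim()
  }
  return values
}

// In a real project the text would come from reading the .env file,
// for example with fs.readFileSync('.env', 'utf8').
const sample = 'ISSUER=https://{yourOktaDomain}/oauth2/default\n'
const config = parseEnv(sample)

console.log(config.ISSUER)
```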

Next, navigate to the Scopes tab. Click the Add Scope button and create a scope for your REST API. You’ll need to give it a name (e.g. parts_manager) and you can give it a description if you like.

You should add the scope name to your .env file as well so your code can access it.

.env

ISSUER=https://{yourOktaDomain}/oauth2/default

SCOPE=parts_manager
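Once the scope name is available to your code, a typical use is to check that a verified token was actually granted it. The sketch below assumes the decoded token claims expose granted scopes as an array named scp; that claim name is an assumption to confirm against your authorization server, hasScope is a hypothetical helper, and the claims object is made up for illustration.

```javascript
// Assumption: verified token claims list granted scopes in an array
// named `scp`. Returns false if the claim is missing or not an array.
const hasScope = (claims, requiredScope) =>
  Array.isArray(claims.scp) && claims.scp.includes(requiredScope)

// Fall back to the example scope name when SCOPE is not set.
const requiredScope = process.env.SCOPE || 'parts_manager'

// Made-up claims, standing in for the payload of a verified token.
const exampleClaims = { sub: 'example-client', scp: ['parts_manager'] }

console.log(hasScope(exampleClaims, requiredScope))
```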

Now you need to create a client. Navigate to Applications, then click Add Application. Select Service, then click Next. Enter a name for your service (e.g. Parts Manager), then click Done.

The post Build a Simple REST API with Node and OAuth 2.0 appeared first on SitePoint.